An edited transcript of a talk I gave at the 2016 Swift Summit conference, in San Francisco, CA (video, slides).

In this talk about conversational user interfaces, I used Swift to build and demo a voice chatbot, with a personality based on the 17th-century moral writer, François de La Rochefoucauld. In 2016, chatbot hype far outran AI capabilities, but design still created interesting opportunities. Now, with OpenAI’s ChatGPT and Anthropic’s Claude, we have amazing AI capabilities. But are we designing for it yet? Give it time.

Hi, everyone. I’d like to tell you about Talking to Swift, but to do that we need to consider the state of talking to computers in general.

And whenever I think about talking to computers, the first thing I think about is Star Trek IV.

I don’t know if any of you remember this. It’s often known as “the one with the whales.”

It’s 300 years in the future and there are aliens attacking Earth. The crew of the Enterprise needs to save the Earth, like they always do.

But to do this they need to come back in time to 1986, to San Francisco, to a point actually just a few miles north of where we are now, where the Golden Gate Bridge is, and they need to collect a pair of humpback whales to bring them back to the future.

But this is hard because whales are heavy!

One of the ways they figure out that they can do this is if Scotty, the engineer of the Enterprise, can talk to an engineer in 1986 and explain to him — convince him — how to make transparent aluminum, a not-yet-invented material from the future which is strong enough to transport the whales.

It’s tricky, right? How do you communicate the formula for transparent aluminum and convince someone it’s going to work?

So Scotty sits down with the engineering professor at his 1986 Macintosh Plus and has this interaction:

McCoy: Perhaps the professor could use your computer?

Engineer: Please.

Scotty (speaking into the mouse): Computer? Computer. Hello, computer.

Engineer: Just use the keyboard.

Scotty: The keyboard, how quaint.

What I love about this is that the joke works two ways now.

Because back then when this movie came out, the joke was, “Silly Scotty, don’t you know, of course, you can’t talk to computers. That’s crazy.”

But when we watch it now, I feel like the joke still works because now we feel, “Silly Scotty, of course you can talk to computers, but you’re going to want to just use the keyboard anyway.”

So this joke has moved through time, but remained funny in different ways.

It’s an interesting question: why is it still funny? Why don’t we expect to talk to our computers?

Because if you think about it, Captain Picard has a 13-inch iPad Pro, like I do.

Quark in Deep Space Nine clearly takes Apple Pay with Touch ID.

So in all these respects, it feels like we should be there with talking to computers.

And if you were listening to the news earlier this year, there was a lot of talk about bots and artificial intelligence and messaging. These ideas, these phrases, were all over the media.

And I thought, “Well, this is very exciting. I work with computers. Bots are here. We’re going to be talking to our computers soon.

“I work in tech, I’m making apps. If bots are the next new thing, I want to start making bots!

“I want to find out how to do that.”

That’s what got me thinking about conversational user interfaces — conversational UI — and trying to figure out exactly what that would mean.

What I want to do in this talk is:

- First of all, just offer my opinion on what it is.

What was everyone talking about when they talk about conversational UI? Why was all this stuff in the news? What’s real? What’s not real? This part is a background survey.

- Second, given the bits that are real, I’ll say something about: how do we design it?

How do you design a conversational UI?

We know something about how to design apps, visual interfaces, but how do we design a conversational UI?

- And finally, how do we build it with Swift?

What can we make with the tools in front of us right now?

So what is it?

There were actually a few different things all swirling around and getting lumped together when people were talking about bots and messaging and chatbots earlier in the year.

One of those is just the rise of messaging.

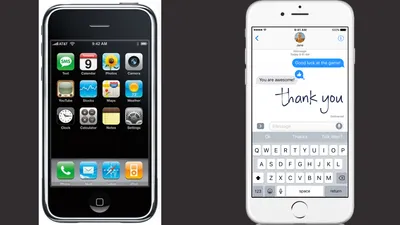

One simple measure of the rise of messaging is to think how much more complex garden variety messaging applications have gotten over the last few years.

The original iPhone OS didn’t have iMessages.

It just had an SMS app, because all you could do was send SMS messages, just plain text. But now you’ve got (I need to check my notes here) emojis, images, videos, sounds, URLs, handwriting, stickers, tapbacks, and apps, full-blown interactive apps that you can send someone in messaging.

You can even get floating balloons and lasers and confetti.

Which sounds like it would be a joke if I said it a few years ago, “You’re going to be able to get confetti in your messages.”

You wouldn’t think that was real, but it’s totally real. Confetti is here.

But less prosaically, I’d say there’s been a rise in messaging, not just in that we have richer media for messaging between people, but in that the messaging UI, the message thread, is being used for purposes beyond that.

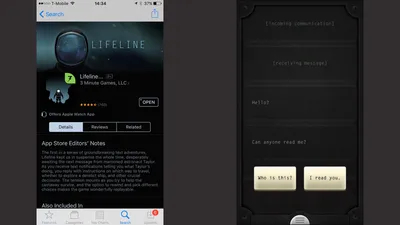

One example is this great app called Lifeline.

Lifeline’s a game, and you play this game just by communicating with an astronaut. But you communicate with the astronaut only through text messages.

You can see that UI on the right there. It looks like the threaded messaging UI that you’d expect from TweetBot or from iMessages. It’s restricting the responses you can give, but the essential interaction model is the interaction model of a messaging app.

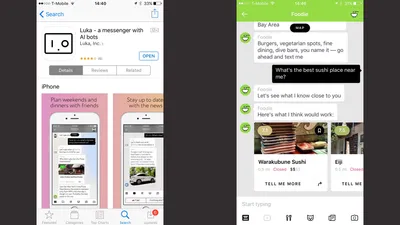

Now, more ambitiously, there’s this app here.

This app is Luka, and it provides a messaging-like interface to different bots to do different kinds of service-discovery. If you’re looking for a restaurant, you can ask for restaurants and then it’ll give you an answer and you can ask a little bit more.

It’s not just restaurants: the tabs along the bottom indicate different sorts of queries you can make. You can ask it for simple math calculations.

This app’s quite interesting. Usually it does offer this guidance in the bottom about what you can input, but it does also offer you some capacity for freeform text communication to solve a problem. And, theoretically, this app is exactly on trend when people talk about the rise of chatbots and messaging and messages as delivery service.

But actually, I think apps like this are quite rare.

There’s this one and maybe one other. My impression is that they are not taking off or working quite as well as one would ideally hope.

But however well the systems work, I would say that this idea is one of the trends we’re seeing — the rise of the messaging thread as a new, evolving interaction model, which is being used for more diverse purposes, for commerce, for communicating not just with people, but also with software.

The second trend is genuine chatbots, like Luka.

And by chatbot, I mean extended natural language chat with a software agent. So not just one message and you’re done, but back and forth a little bit. Maybe even a talk about life — just a wandering chat.

Now, the dream of that is not new at all. This is an idea we’ve had for a while.

You could have long chats with HAL in 2001.

HAL might or might not open the pod bay door, but you can go back and forth with him and he follows the discussion.

Also, famously there’s this guy. He is very goal-oriented but he can hold a conversation.

In a more friendly vein, I don’t know how many of you here recognize KITT. That’s the car from Knight Rider, starring David Hasselhoff as Michael Knight.

KITT would help Michael fight crime.

But then they’d also have little chats, and KITT would make fun of him for hitting on women or whatever.

They were buddies. They had a certain rapport with each other.

So the dream is not new.

And I’d say also, when you start digging into it, you find the reality is not new either. People have been making chatbots, or trying to make chatbots, for as long as there have been keyboards.

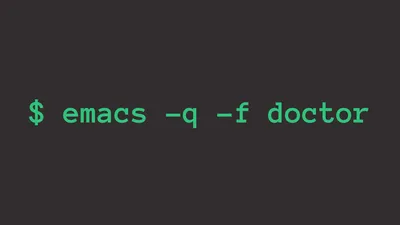

In fact, there’s a chatbot pre-installed on all of your Macs right now!

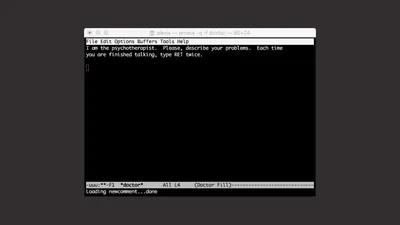

This chatbot provides a simulation of a psychotherapist.

If you all open your terminal, and run Emacs with the command emacs -q -f doctor, you get ELIZA, a Rogerian psychotherapist.

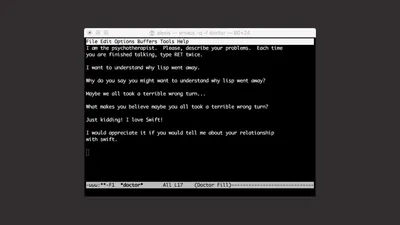

You can talk back and forth with ELIZA.

Maybe you get frustrated trying to debug your e-lisp code? You can get in there and get a little consolation from ELIZA!

You can say things, ELIZA replies, goes back and forth. It looks at the language you’ve used and repeats back bits of your language.

The first time this sort of thing came out, it was very impressive for people. Now we’re more likely to recognize it as a bit of a toy. But the point is, if you go into your Emacs and look at the source code there, it’s copyrighted 1985. And in the comment header, they’re talking already about this being “classic pseudo AI.”

Classic because the original ELIZA code is from 1966!

This is quite old stuff.

So what’s new now that we’d suddenly be talking about chatbots?

I think one thing that’s new vs 1966 is that we’re actually using them.

We’re actually having these interactions with functional products. Siri, obviously, is on all the Apple platforms. There’s Google’s Assistant. There’s Amazon’s Alexa assistant, which you can access with the Echo device and the Dot device and also through their API. There are also directory services for television like Xfinity X1.

If you think about it, even things like the Slackbot are little bits of a chat-like interface. When you sign up for a new Slack team, they always ask you for your name again, and it’s always the Slackbot chatting away at you to get this stuff.

So one change is that now we’re really using chatbots, even if actually they’re not that new.

But, there’s another take on “we really use them.”

This is that we’ve been using chatbot-like things for a while … and hating them!

Consider all your interactions with a phone tree. That’s a chatbot and it’s rather terrible.

Or if you’ve ever tried interacting with an automated customer service agent, just by typing text into a website, that’s a chatbot. That’s never been a good experience.

We’ve been using these things for a while. They’re not so new that it’s easy to see what the new excitement is about.

I know there’s a lot of interesting work happening in this area, but I looked around and looked around and I couldn’t find one bot with which I could have a long extended conversation that seemed natural.

I don’t think there’s any magic new thing that’s arrived, as near as I can tell.

It’s worth saying a word about SiriKit, which Apple released at the last WWDC.

When Apple released SiriKit, I was very excited. I wanted something like SiriKit. And then I looked at SiriKit and I thought, “Oh, they didn’t really give us the real thing.”

Part of me had in mind that when they released SiriKit, SiriKit would be like HAL. They’d have the new magic thing that I could configure and it would understand all sorts of interactions. That would be the real thing.

But SiriKit does not have HAL inside.

SiriKit has an API inside with a predefined set of commands. (Don’t call them commands, call them intents!) And then some rules that Apple manages about how utterances (which just means “what people say”) get mapped to intents.

And you can configure it for your own service, if it’s one of the supported service types, and you can specify certain variable slots that get filled based on the utterances.

In other words, when you look at it, it just looks like a computer program! There was no magic in there. And I came to realize in thinking about it, well, of course. Of course that’s what it had to be.

Nobody knows how to make HAL. It was silly of me to expect otherwise.

And SiriKit is quite good, but this is all there is in terms of chatbots.

Given this, I suggest a new name to understand chatbots and understand this trend. Every time you see a chatbot, you should just think of it as a restricted domain bot, because they’re good within a restricted domain.

But I don’t think there are any generalized chatbots.

Last trend, before I wrap up my trend survey and get into the nuts and bolts here, is voice.

One of the things that a lot of the new services have in common is that they’re all actually using voice in some way.

So obviously, there’s Siri. I use Siri multiple times every day, usually when I’m in the car and it works great. I use it for text transcription, for the basic set of commands where I know it can respond, and it’s quite good.

Also, there’s the Amazon Echo device. That works really well, presumably because it has dedicated hardware.

It’s got seven microphones on the top that allow it to detect a voice and know where it’s coming from and focus only on that voice.

And last, I think yesterday, or on Friday, Google released their own home assistant, which is going to be like Amazon Echo, with a microphone always listening to you, ready to help in some way.

So why is this happening now?

In this area, I think there is a technical reason for it.

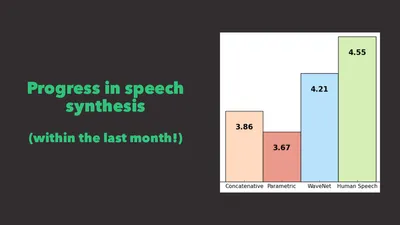

We’re living through a moment where there’s significant progress in speech recognition.

For instance, just within the last month, Microsoft announced that they beat a record that had previously only been reached by humans, on ability to accurately recognize speech in this large corpus of recorded telephone conversation data. That’s impressive. That’s a genuine advance.

And also just within the last month, Google announced having made significant advances in speech synthesis — not beating human synthesis, but doing a lot better than past efforts.

And if you look at both of these works (these are the PDFs), what you see they have in common is that they’re both using neural networks.

They’re using new kinds of neural networks — recurrent neural networks, convolutional neural networks, deep learning neural networks.

So if you look at all these trends, there is actually something happening around voice, and it’s being driven by progress in the use of neural networks.

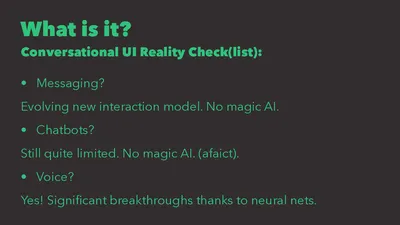

Just to quickly recap on the question of “what is it really?” What is conversational UI?

- Messaging? There’s an evolving interaction model which is kind of interesting, but there’s no magic AI there.

- Chatbots? They’re still quite limited as near as I can tell. There’s no magic either, as far as I can tell. I know some people are trying to use neural networks for that. But it hasn’t gotten that far yet.

- Voice? Yes. There’s definitely something happening there and it’s happening due to neural networks.

So voice is what you have to work with. But if you want to do a chatbot, you need to be very restricted about the domain.

That’s my takeaway on the reality of the situation right now.

My theory on how this stuff happens is that there’s a game of telephone. Somewhere, in a room, people are doing work with neural networks and machine learning and they’re making real breakthroughs. And then they tell people down the hall, people who don’t know exactly what they’re working on, “Hey, we’re making breakthroughs on machine learning!”

And those people go, “Hey, they’re making breakthroughs in AI, related to voice and image recognition!”

And then they go down the hall and eventually you’re getting to very non-technical people in the wider media or in the investor community.

And by the time this game of telephone goes all the way out there to the media, it gets turned into, “AI is happening right now!”

That’s when you get all the hype around the idea that you can talk to computers. When really, the progress is more circumscribed.

But that’s my takeaway on what’s actually there right now: real improvements in voice, nothing magical around chatbots, and messaging is kind of interesting.

Okay. Let’s get practical. How do you design for it? How do you think about designing an interface for voice?

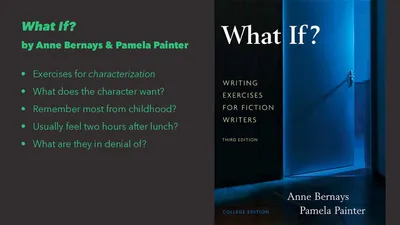

I think the first step is developing a character, like a character in a play.

You’re not writing the operating system, so you don’t need to be completely neutral and boring the way Siri or Google needs to be. You can be more defined in your design, more specific, just in the way that you would be when you’re designing an app. You don’t need to look plain vanilla.

So if you’re developing an assistant, think about three kinds of assistants here.

These are all assistants, but they have very different takes. We’ve got Bruce Wayne’s butler, Alfred: sort of reserved, British accent, worried about Bruce. C-3PO: eager, verbose, panicky, a bit feckless. Elle Woods from Legally Blonde: she’s outgoing, she has this Valley girl dialect, super optimistic.

You could imagine making three different interfaces that were like these characters.

This exercise of design seems a bit like the exercise of writing.

One way to think about it would be if you were writing a novel and you wanted to develop more specifically your idea of what your character was like. There are books full of exercises that give advice on how to think about that. I think these exercises are quite appropriate for thinking about the design of a voice UI. It’s a domain where design is a kind of writing.

Then the other aspect of it, because of the limitations of the chatbot tech, is you need to restrict the domain. You need to say what this bot can actually do and make it something clear so people aren’t frustrated when they hit the limits.

You can’t promise to be a general purpose assistant when you’re just a thing that controls light switches and timers and can tell you what the date is.

I think that’s especially true if you’re offering a pure voice interface. Because in a way, a pure voice UI is the exact opposite of the message thread. Although they’re both lumped together as conversational UI, they’re actually the opposite of each other.

The message thread is kind of magical. It’s even better than a normal UI because it gives you history, which a normal UI doesn’t.

It also tells you about identity. It tells you who said what when. Was this me? Was this the software agent? Was this another person?

It supports rich media, not just text. You can have images, and interactive things.

It guides input, because you can have controls at the bottom that say what can be entered here, so it’s not just open-ended and freeform.

Voice is exactly the opposite. There’s no history, there’s no identity. You don’t know who said what, because you can’t tell the voices apart. It’s only for text. And it’s completely freeform — it’s hard to give guidance about what can be said.

I think this is what leads to a lot of the frustrations with the voice-based interfaces of the assistants we have now. You need to restrict, especially when you’re working only with voice.

Let’s get into an example. I chose a character that already exists: François, the Duke of La Rochefoucauld. And his domain is the sorrows of life.

He’s really easy to write because he’s a real guy. François VI, Duc de La Rochefoucauld, Prince de Marcillac, born 1613, died 1680. He was a wealthy, noble, good-looking fellow. He wrote in the 17th century.

He was also, despite being born very fortunate, unlucky. He had a marriage that he wasn’t very happy with. He was exiled from Paris at one point. He was libeled. He was shot through the eye.

Looked a bit like this.

If you had to characterize his temperament, it’s probably affected by those facts.

This was his ancestral seat, which I think the Rochefoucaulds still live in. So he didn’t have it so bad!

But he did have these sorrows.

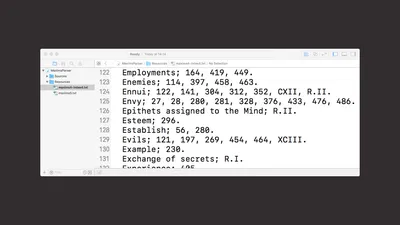

He wrote this great book called the Maxims.

The Maxims is a book of epigrams, of aphorisms, short little witty statements about life, and they cover everything.

Some examples — they cover love, ambition, self-delusion.

Here I just started going through the E’s in the index — ennui, envy, esteem, evils, the exchange of secrets.

If you had to characterize what he’s like as a character, you’d say cynical. He’s worldly wise, he’s witty. His domain is life’s sorrows, things that might make you unhappy, things that make you reflect on the soul.

Just to give you a taste for this guy because I like him so much, here’s one of his more famous epigrams:

We all have strength enough to bear the misfortunes of others.

It’s always the end that has that bit of a sting. Other people’s problems, they’re always pretty easy to bear.

Or another one:

To say that one never flirts is in itself a form of flirtation.

Not many modern philosophers are giving you advice on flirtation. I think it’s good, therefore, to go back in the centuries when you need that sort of thing.

He’s the kind of guy who’d probably be very, very popular on Twitter right now until he said something that was a little too out there, and then he’d be very unpopular. Maybe he’d get shot through the eye.

So how do we build it? Let’s get to brass tacks.

First step, we take the Project Gutenberg, copyright-unencumbered text of Rochefoucauld’s epigrams and we load it into a Swift playground.

We have all the epigrams in here, and then we also have here the index, which nicely gives you the theme associated with every one of the epigrams.
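As a rough illustration of that structure, here is a minimal sketch of how the epigrams and their index themes might be held in memory once the text has been parsed. The `Maxim` type, the theme labels attached to each quotation, and the `randomMaxim(on:)` helper are hypothetical names chosen for this example, not the playground's actual code; the three quotations are ones that appear in this talk.

```swift
import Foundation

// Hypothetical model: one epigram plus the index themes that point at it.
struct Maxim {
    let text: String
    let themes: [String]
}

// A tiny stand-in corpus; the theme labels here are assumptions for illustration.
let maxims = [
    Maxim(text: "We all have strength enough to bear the misfortunes of others.",
          themes: ["misfortune"]),
    Maxim(text: "To say that one never flirts is in itself a form of flirtation.",
          themes: ["flirtation"]),
    Maxim(text: "Envy is destroyed by true friendship, flirtation by true love.",
          themes: ["envy", "flirtation", "love"]),
]

// Invert the corpus into theme -> maxims, mirroring the book's index.
var maximsByTheme: [String: [Maxim]] = [:]
for maxim in maxims {
    for theme in maxim.themes {
        maximsByTheme[theme, default: []].append(maxim)
    }
}

// Pick a random maxim for a theme, or nil when the theme is not indexed.
func randomMaxim(on theme: String) -> String? {
    return maximsByTheme[theme.lowercased()]?.randomElement()?.text
}
```

With a lookup like this, the bot only needs to settle on a theme; the quotation itself comes straight from the book.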

We’re going to need it to talk. Talking is actually the easy part in iOS. We can do that by importing AVFoundation.

Because AVFoundation provides a speech synthesis API!

There’s really not much to say about it. It’s much more flexible than what I show here with the slide. You can have it speak in French, speak a little faster than normal, speak at a higher pitch than normal, but basically you create a synthesizer and then you create an utterance object which contains text.

And then you tell the synthesizer to speak the utterance object, and it does.

And when it’s done, it’ll give you a call back on your delegate.

There are a lot of different voices available to you — men, women, different accents, different languages.

If you just configure it by default like this, you get the default locale, which in this case is English. That’s what we’re going to use to get Rochefoucauld talking.
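The steps just described can be sketched roughly as follows. This is a minimal example rather than the code from the slide: the `SpeechDelegate` class name is made up for illustration, and the commented-out tuning values are arbitrary. In a playground you would also need to keep the page running long enough for the audio to play.

```swift
import AVFoundation

// Receives the callback when an utterance has finished playing.
final class SpeechDelegate: NSObject, AVSpeechSynthesizerDelegate {
    func speechSynthesizer(_ synthesizer: AVSpeechSynthesizer,
                           didFinish utterance: AVSpeechUtterance) {
        print("Finished speaking: \(utterance.speechString)")
    }
}

let synthesizer = AVSpeechSynthesizer()
let speechDelegate = SpeechDelegate()   // keep a strong reference; the delegate property is weak
synthesizer.delegate = speechDelegate

// The utterance object wraps the text to be spoken.
let utterance = AVSpeechUtterance(
    string: "We all have strength enough to bear the misfortunes of others.")

// Optional tuning, left at the defaults here:
// utterance.voice = AVSpeechSynthesisVoice(language: "fr-FR")   // a French voice
// utterance.rate = AVSpeechUtteranceDefaultSpeechRate * 1.1     // a little faster
// utterance.pitchMultiplier = 1.2                               // a little higher

// Hand the utterance to the synthesizer; the delegate hears about completion.
synthesizer.speak(utterance)
```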

Now, we also need Rochefoucauld to understand what we’re saying.

And for that we need to import Speech.

This is the speech recognition API that was introduced in iOS 10.

There wasn’t actually an onstage WWDC session about this, but there’s sort of a secret online-only session. It’s session 509. It’s 15 minutes long, it’s very clear, and they have pretty good sample code.

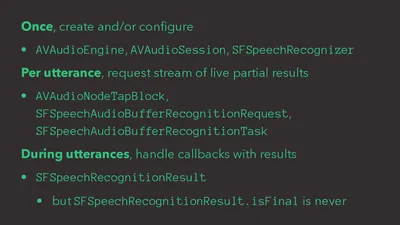

This is the essence of the interaction.

- Only once, you need to create some armature. It’s going to be around for the lifetime of your app. AVAudioEngine, AVAudioSession, SFSpeechRecognizer: we need to configure those.

- Then, for every single utterance that you want to recognize, you create a request, a recognition request object, which you’ll use to create the recognition task, which is what the speech recognition system is going to use to keep track of that utterance’s recognition while it’s in progress. You also need to set up some wiring to connect the AV system so the audio buffers that are coming in from the AV system get sent to the speech recognizer.

- Then, during the utterance, you get these callbacks. And every callback delivers an SFSpeechRecognitionResult. But the one fly in the ointment here, which I should say a little bit about, is that the SFSpeechRecognitionResult.isFinal property is never actually true. That is, it never says it’s done!
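The three steps above can be sketched roughly like this. It is a simplified outline under several assumptions, not the session's sample code: the `UtteranceListener` class and its method names are invented for illustration, the locale is fixed to US English, and the authorization steps (calling SFSpeechRecognizer.requestAuthorization and adding the speech recognition and microphone usage descriptions to Info.plist) are left out.

```swift
import AVFoundation
import Speech

// Hypothetical wrapper for illustration; iOS only, since it uses AVAudioSession.
final class UtteranceListener {
    // Step 1: the armature, created once and kept for the app's lifetime.
    private let audioEngine = AVAudioEngine()
    private let recognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US"))

    // Step 2: per-utterance objects.
    private var request: SFSpeechAudioBufferRecognitionRequest?
    private var task: SFSpeechRecognitionTask?

    func start(onPartial: @escaping (String) -> Void) throws {
        let session = AVAudioSession.sharedInstance()
        try session.setCategory(.record, mode: .measurement, options: [])
        try session.setActive(true, options: .notifyOthersOnDeactivation)

        let request = SFSpeechAudioBufferRecognitionRequest()
        request.shouldReportPartialResults = true
        self.request = request

        // Wiring: forward microphone buffers from the audio engine to the request.
        let input = audioEngine.inputNode
        let format = input.outputFormat(forBus: 0)
        input.installTap(onBus: 0, bufferSize: 1024, format: format) { buffer, _ in
            request.append(buffer)
        }
        audioEngine.prepare()
        try audioEngine.start()

        // Step 3: each callback delivers the best transcription so far.
        task = recognizer?.recognitionTask(with: request) { [weak self] result, error in
            if let result = result {
                onPartial(result.bestTranscription.formattedString)
            }
            if error != nil {
                self?.stop()
            }
        }
    }

    // Tear down the per-utterance wiring; the armature stays alive.
    func stop() {
        audioEngine.stop()
        audioEngine.inputNode.removeTap(onBus: 0)
        request?.endAudio()
        task?.cancel()
        request = nil
        task = nil
    }
}
```

Note that nothing in this sketch decides when the speaker has finished; something outside has to call `stop()`.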

In other words, you have a problem regarding what is called endpointing — recognizing when an utterance is finished.

But it’s not that hard to solve.

The solution is to use NSOperation (or now, just Operation).

Specifically, you can define an asynchronous operation.

With this asynchronous operation, you can wrap up all of the work of recognizing a single utterance. And that operation can be defined so that every time one of these callbacks comes back saying, “I recognize a bit more. I recognize a bit more,” it restarts a timer.

And then the moment the timer has been able to go for, say, three seconds without a recognition, then you know you’ve reached the end of that utterance and you can shut down that operation and mark it done.

In other words, you create a timer to define an endpoint after a certain period of time with no recognition.
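Here is a minimal sketch of just the timer-restart logic, leaving out the asynchronous Operation wrapper around it. The `SilenceEndpointer` class and its method names are hypothetical, and it uses a cancellable DispatchWorkItem in place of a Timer, which is one possible choice rather than necessarily what the demo does.

```swift
import Foundation

// Hypothetical helper: fires `onEndpoint` once no activity has been
// reported for `silence` seconds. Call its methods from `queue` only.
final class SilenceEndpointer {
    private let silence: TimeInterval
    private let queue: DispatchQueue
    private let onEndpoint: () -> Void
    private var pending: DispatchWorkItem?

    init(silence: TimeInterval,
         queue: DispatchQueue = .main,
         onEndpoint: @escaping () -> Void) {
        self.silence = silence
        self.queue = queue
        self.onEndpoint = onEndpoint
    }

    // Call this from every partial-result callback: it restarts the countdown.
    func noteActivity() {
        pending?.cancel()
        let item = DispatchWorkItem { [weak self] in
            self?.onEndpoint()
        }
        pending = item
        queue.asyncAfter(deadline: .now() + silence, execute: item)
    }

    // Stop waiting, for example when the utterance is abandoned.
    func cancel() {
        pending?.cancel()
        pending = nil
    }
}
```

In the operation described above, the recognition callback would call `noteActivity()`, and the endpoint closure would shut down recognition and mark the operation finished.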

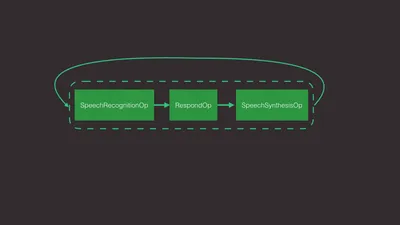

Then the back and forth of a chat is just one operation queue. And these three operations run in sequence over and over again. Recognize, generate the response, speak the response, recognize again.
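As a sketch of that loop, here is one turn of a chat scheduled on a serial operation queue. The real operations are asynchronous and talk to the microphone and the synthesizer; in this example they are replaced by synchronous stand-in blocks, and `ChatState` and `enqueueTurn` are hypothetical names.

```swift
import Foundation

// Shared state for one chat. Only touched by operations that run one at a
// time, which is why it is marked @unchecked Sendable in this sketch.
final class ChatState: @unchecked Sendable {
    var heard = ""
    var reply = ""
    var transcript: [String] = []
}

// Queue one turn: recognize, then generate the response, then speak it.
func enqueueTurn(on queue: OperationQueue, state: ChatState, simulatedSpeech: String) {
    let recognize = BlockOperation {
        state.heard = simulatedSpeech              // stand-in for speech recognition
        state.transcript.append("heard: \(state.heard)")
    }
    let respond = BlockOperation {
        state.reply = "Why do you say that?"       // stand-in for the bot's logic
        state.transcript.append("reply: \(state.reply)")
    }
    let speak = BlockOperation {
        state.transcript.append("spoke: \(state.reply)")   // stand-in for synthesis
    }
    // Dependencies make the ordering explicit.
    respond.addDependency(recognize)
    speak.addDependency(respond)
    queue.addOperations([recognize, respond, speak], waitUntilFinished: false)
}

let chatQueue = OperationQueue()
chatQueue.maxConcurrentOperationCount = 1   // one step at a time
```

A continuing conversation would enqueue the next turn when the speak step completes.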

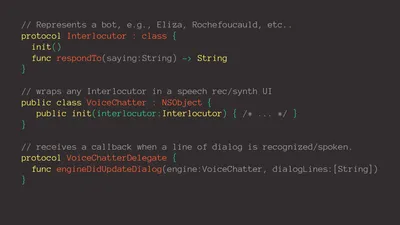

Once you have that, then you can create this much more general infrastructure.

At the top is a protocol called Interlocutor, and that’s just any object that takes something that someone said, and then responds by saying something else.

Then you can feed anything that implements Interlocutor to VoiceChatter. And VoiceChatter just wraps it in a voice UI.
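To make the shape of this concrete, here is a minimal sketch of such a protocol and a front end that drives it. Only the names Interlocutor and VoiceChatter come from the talk; the method name `response(to:)`, the `Echoer` bot, and the closure-based `Chatter` stand-in (which takes its "ears" and "mouth" as parameters instead of using the speech APIs) are assumptions made for illustration.

```swift
import Foundation

// Anything that hears a line of text and answers with another.
protocol Interlocutor {
    mutating func response(to utterance: String) -> String
}

// A trivial conformer, loosely in the spirit of ELIZA.
struct Echoer: Interlocutor {
    func response(to utterance: String) -> String {
        return "Why do you say \"\(utterance)\"?"
    }
}

// Stand-in for VoiceChatter: it drives any Interlocutor, with listening
// and speaking injected as closures.
struct Chatter<Bot: Interlocutor> {
    var bot: Bot
    let listen: () -> String?        // returns nil when the conversation is over
    let speak: (String) -> Void

    mutating func run() {
        while let heard = listen() {
            speak(bot.response(to: heard))
        }
    }
}
```

Passing `readLine` and `print` as the two closures gives a console chat; a voice front end would pass speech recognition and speech synthesis instead, and the bot would not need to change.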

Now, for my case, I’m going to simulate Rochefoucauld. So I’m going to have a few questions and answers that the bot asks, and then afterwards it’ll deliver a randomly chosen quotation appropriate for those questions and answers from Rochefoucauld.

That’s our Rochefoucauld simulation.

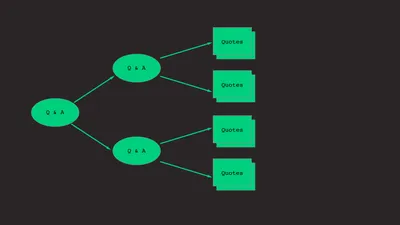

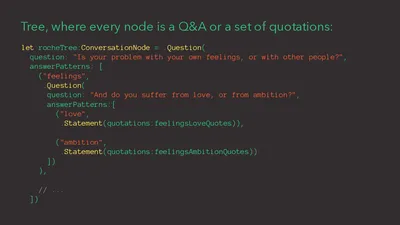

So as a simple base for it, the tree of possible conversations is just represented by this one tree object using a type called ConversationNode. Every conversation node is either a question and answer pair or a final statement from Rochefoucauld.
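A tree like that might be sketched as a recursive enum. Only the type name ConversationNode comes from the talk; the case names, the keyword-matching rule in `advance`, and the theme attached to the "people" branch are assumptions for illustration. The two questions are the ones from the demo.

```swift
import Foundation

indirect enum ConversationNode {
    // A question, plus the next node for each answer keyword we listen for.
    case question(String, answers: [String: ConversationNode])
    // A leaf: the theme from which the closing maxim is drawn.
    case finalStatement(theme: String)
}

let tree = ConversationNode.question(
    "Is your problem with your own feelings or with other people?",
    answers: [
        "feelings": .question(
            "And do you suffer from love or from ambition?",
            answers: [
                "love": .finalStatement(theme: "love"),
                "ambition": .finalStatement(theme: "ambition"),
            ]),
        "people": .finalStatement(theme: "envy"),   // hypothetical branch
    ])

// Move to the branch whose keyword appears in the reply. If nothing matches,
// stay on the same node so the question can be asked again. If several
// keywords match, which branch wins is unspecified in this sketch.
func advance(from node: ConversationNode, hearing reply: String) -> ConversationNode {
    guard case let .question(_, answers: answers) = node else { return node }
    let heard = reply.lowercased()
    for (keyword, next) in answers where heard.contains(keyword) {
        return next
    }
    return node
}
```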

How does that end up? You get something like this.

Alexis: Rochefoucauld was a cynical old Frenchman and he’s full of interesting wisdom. And you can talk to him on your phone. Hello.

Rochefoucauld: Is your problem with your own feelings or with other people?

Alexis: My problem is with my own feelings.

Rochefoucauld: And do you suffer from love or from ambition?

Alexis: I suffer from love.

Rochefoucauld: Envy is destroyed by true friendship, flirtation by true love.

Alexis: Thank you for that.

Isn’t that great? We’re talking to Rochefoucauld on iOS 10 on our phone.

Because we have this general protocol-oriented design, you can plug other things in. For instance, you can take the old ELIZA code, which someone ported to Objective-C very fortunately.

Alexis: So who’s ELIZA? ELIZA is one of the first chatbots. She’s a Rogerian psychotherapist who was a product of 1960s AI culture. And with iOS 10 stuff, you can get her working on your phone. Hello.

Eliza: Hi, I’m ELIZA. What do you want to talk about?

Alexis: I want to talk about Swift.

Eliza: Why do you want to talk about Swift?

Alexis: Swift makes me happy.

Eliza: That’s interesting.

So there we go. We also have ELIZA. All you need to do is create an object that takes texts, gives you a text out, and now you can talk to it.

You could even, if you wanted to, wrap it up in message bubbles, maybe using Jesse Squires’s awesome JSQMessagesViewController. Then it would look like a message UI.

But, we want to do more than this!

Because now Swift runs on Linux, so Swift can go anywhere.

I’m going to go just a little bit over my time for a few minutes, but I’ll try to race through this bit quickly, because I just love it and I think it’s so neat.

I also wanted to get Swift working on the Amazon Echo.

Now you can’t just import Alexa, unfortunately. Amazon is not that helpful just yet.

Alexa is what you use when you want to program for the Amazon Echo, and the Alexa service doesn’t work that way.

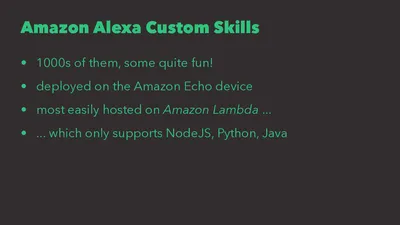

You need to install an Alexa custom skill on your Echo. There are thousands that you can install.

A lot of them are little trivia games. There’s a really nice one that’s a simulation of you being a detective trying to solve the murder of Batman’s parents. There’s a lot of cool stuff.

The easiest way to deploy an Amazon Alexa custom skill is by using the Amazon Lambda service. Amazon Lambda is a serverless hosting service.

So basically, instead of defining a whole app and then running it somewhere, you just define a function and you upload that function to Amazon, and they take care of calling the function for you and scaling it and connecting it to your Echo, if that’s what you want.

Unfortunately, Amazon Lambda only supports Node and Python and Java. It doesn’t support Swift.

So how am I going to run Swift on the Amazon Echo?

Very sad.

But then I thought, “this is for Swift Summit!” It’s a challenge.

Why do it? Because the summit is there.

I want to put Swift at the top of the Swift Summit. Can it be done?

And it can be done!

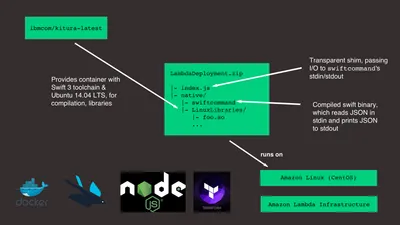

There’s a loophole in the system, which is that the Amazon Lambda function you define (although it’s written in, say, Node.js) is allowed to include an executable binary.

So the secret here is you just define a Lambda function, which is a little bit of JavaScript code, and that JavaScript code just passes its input into your Swift executable and the Swift executable passes its output out, and that goes out through the JavaScript again into Lambda through Alexa, and on to the Echo.
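The Swift side of that handoff might look roughly like this: a function that takes the request JSON the JavaScript shim passes in and returns the response JSON to pass back out. This is a sketch under assumptions, not the code from the GitHub project: the type names, the `handle` function, and the "SootheIntent" intent name are hypothetical, and only the few fields of the Alexa request and response formats that the example needs are modeled.

```swift
import Foundation

// The slice of an Alexa request this sketch reads; other fields are ignored.
struct AlexaRequest: Decodable {
    struct Request: Decodable {
        struct Intent: Decodable { let name: String }
        let type: String
        let intent: Intent?
    }
    let request: Request
}

// A plain-text spoken reply in the Alexa response format.
struct AlexaResponse: Encodable {
    struct OutputSpeech: Encodable {
        var type = "PlainText"
        var text: String
    }
    struct Response: Encodable {
        var outputSpeech: OutputSpeech
        var shouldEndSession = true
    }
    var version = "1.0"
    var response: Response
}

// Turn request JSON into response JSON. "SootheIntent" is a made-up intent name.
func handle(_ input: Data) -> Data {
    let decoded = try? JSONDecoder().decode(AlexaRequest.self, from: input)
    let text: String
    if decoded?.request.intent?.name == "SootheIntent" {
        text = "We all have strength enough to bear the misfortunes of others."
    } else {
        text = "Ask me to soothe your soul."
    }
    let reply = AlexaResponse(response: .init(outputSpeech: .init(text: text)))
    return (try? JSONEncoder().encode(reply)) ?? Data()
}

// In the executable's main.swift, the wiring to the JavaScript shim would be:
//   let output = handle(FileHandle.standardInput.readDataToEndOfFile())
//   FileHandle.standardOutput.write(output)
```

Keeping the logic in a function from Data to Data also means the same code can be exercised on a Mac or in Docker without Lambda in the loop.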

I won’t talk too much about the bits and pieces of how it’s put together. There’s a project on GitHub if you’re curious.

But basically, it’s exactly what it looks like here. You have a directory, a little bit of JavaScript. You put your Swift in there, you add some libraries you need. It helps to have Docker to make it a bit easier to use and test on the Mac.

And there you go.

And then you can get something like this.

Alexis: Echo, ask Rochefoucauld to soothe my soul.

Rochefoucauld: Usually, we only praise to be praised.

Alexis: Echo, ask Rochefoucauld to speak to my condition.

Rochefoucauld: It is easier to govern others than to prevent being governed.

So, to sum up, what to make of this?

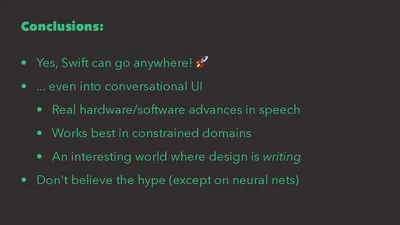

I’d say one conclusion is yes, Swift can go anywhere. Swift’s awesome. Because you can use the same code base to define a conversation that’s going to run in an Alexa function, in a Lambda function to drive an Alexa custom skill, as you use on iOS. You can use literally the same code everywhere, and that’s fantastic.

But what’s going on at large? What does conversational UI mean?

There are real advances in speech recognition and speech synthesis, but the stuff around actually processing language only seems to work in very constrained domains. So I think the best we can do as developers is to program using good speech interfaces, being very careful to word things so that we’re just working within constrained domains, while thinking carefully about designing the personality and the character.

In general, while there’s a lot of hype in the news about AI becoming miraculous so that we can talk to a computer soon, don’t believe the hype. If you dig into it, there’s usually a kernel of truth to it, but it gets magnified and generalized on its way to the larger media.

But I still think it’s very exciting, and I think it’s a fun thing to play with!

Thanks to other people who helped me think about this, especially the gentleman who ported ELIZA to Objective-C once upon a time, and to the IBM team, whose Kitura stuff was very helpful. Thank you all very much!